This blog post is written as a dialogue between two imaginary characters, one of them representing myself (H) and the other a stubborn straw man (S). It is broken into four parts: the dogma, the insight, the decoy, and the clues. If you do not feel like reading the whole thing, you can skip to Part IV; it contains a summary of the other parts.

H: I need a straw man to make this a bit entertaining.

S: I can be your straw man. What are we discussing today?

H: We are going to challenge a belief that pervades biological sciences, a very harmful one in my view.

S: How bold of you! People always summon straw men in these situations.

H: Okay. I get it. But please try to be stubborn. And ask good questions. I want whoever is reading this to deeply understand my arguments.

S: I can try.

Part I: The Dogma

S: So what is this harmful belief that you talk of?

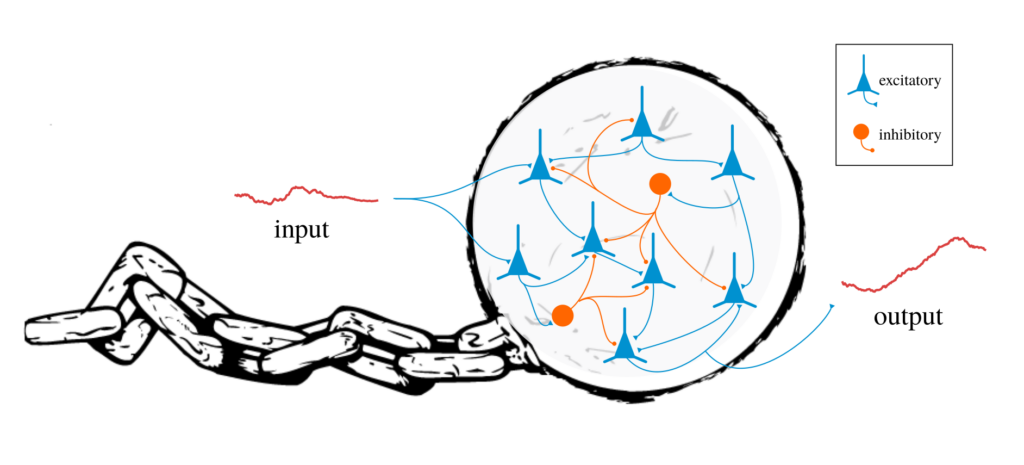

H: It is the belief that network models and, more generally, dynamical systems can explain computation in biology. The assumption in neuroscience is that cognition and behavior are implemented by networks of neurons that inhibit or excite one another. In developmental biology, the process of cell division and differentiation that builds an entire animal from a single cell is thought to be implemented by networks of genes that regulate one another. Cell function is typically explained in a framework where networks of molecular events interact with one another. Network models dominate the way we think about biological computation.

S: I feel like I’ve heard this argument before. You are talking about connectionism. Right?

H: Well, not exactly. Connectionism is an extreme approach that applies to cognitive science. But my criticism is directed at all of biology, including approaches that aren’t thought of as connectionist. Virtually the entire field of biology adheres to a paradigm of network models or dynamical systems.

S: It sounds like you are criticizing a simplified caricature of biology. Networks are just one framework that people use to study things. Neuroscience, for instance, is not merely about studying networks of inhibitory/excitatory neurons. Sometimes single neurons can do complicated things and people study neurons at that level.

H: Like what?

S: A lot goes on inside a single neuron. Take dendritic computation, for instance. Dendrites form a complex tree-like structure that can transform a cell’s inputs in sophisticated ways. It has even been shown that single neurons are capable of implementing things like XOR gates on their inputs. Neurons are not always simple integrate-and-fire cells with monotonic input/output relationships.

H: Dendritic computation can be modeled as a network. I don’t see this as transcending the network paradigm because it is merely looking at a single neuron as a network of dendritic compartments. In the end you have a network of networks which is just a larger network.

S: Okay, but not everything is a network. For example, single neurons can modulate their intrinsic properties, like excitability. In fact, there’s a recent study that suggests the precise ion channel composition of neurons might be an indispensable part of understanding how songs are generated in songbirds.

And to make matters even more complicated, neuronal activity can be rapidly modulated by molecular factors. There’s another recent study in flies showing that phosphorylation rates can be used to control a cell’s activity in a way that implements a cell-intrinsic timer.

H: These happen to be some of my favorite papers! But they still fall within the paradigm of networks and dynamical systems. The paradigm I am talking about runs deep in all of biology, not just neuroscience. And it is not a caricature of the field. I don’t know of a single exception! Let me try to make it clearer.

Take any biological model, including any of the ones you brought up. Can you represent the state of the system as a finite set of variables, which change in time depending on the system’s state? Those variables can be expression levels of certain ion channels, phosphorylation rates of certain molecules, synaptic weights, or whatever you like. The rates at which each variable changes can depend on any number of other variables, including itself. Usually the dependencies are sparse, in which case it is convenient to draw it out as a sparse network. But the sparsity doesn’t really matter to me.

S: You’re describing dynamical systems.

H: Yes. The question is whether you can describe your model as a [finite-dimensional] dynamical system. Can you represent your model with a predetermined number of quantities and write down a number of equations that specify how those quantities change in time?
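
To make H’s question concrete, here is a minimal Python sketch of a model that answers “yes”. The two variables and the mutual-repression rule (a so-called toggle switch) are illustrative assumptions, not taken from any particular study. What matters is that the list of variables and the rules for how they change are fixed before the simulation starts.

```python
def derivatives(state, alpha=4.0, n=2.0):
    """Rates of change for a hypothetical two-gene model.

    Each variable (say, an expression level) represses the other, and each
    decays at rate 1. The rates depend only on the current state.
    """
    x, y = state
    dx = alpha / (1.0 + y**n) - x
    dy = alpha / (1.0 + x**n) - y
    return (dx, dy)

def simulate(state, dt=0.01, steps=5000):
    """Forward-Euler integration; the number of variables never changes."""
    for _ in range(steps):
        d = derivatives(state)
        state = tuple(s + dt * ds for s, ds in zip(state, d))
    return state

final = simulate((2.0, 0.5))
print(final)  # settles with x high and y low
```

However long you run it, the state is always exactly two numbers.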

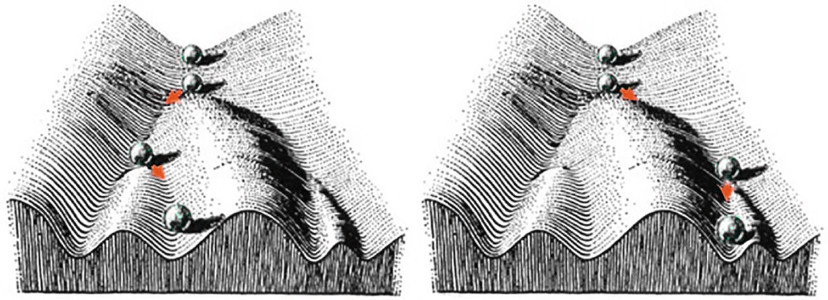

If your answer is yes, you are operating within the paradigm that I am trying to challenge. You can see how this paradigm is not limited to neuroscience. It even guides our developmental models, like Waddington’s epigenetic landscape and Kauffman’s Boolean networks for gene regulation.
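
Kauffman’s networks fit the same mold. The sketch below is a hypothetical random Boolean network in the spirit of his model, with made-up sizes (8 genes, 2 regulators each) and a fixed random seed: each gene is on or off, and its next value is read from a truth table indexed by its regulators. Because the number of genes is fixed, there are only 2**8 possible states, so the trajectory must eventually revisit a state and fall into a cycle (an attractor).

```python
import random

def make_network(n_genes=8, k=2, seed=0):
    """Build a hypothetical random Boolean network (Kauffman-style NK model)."""
    rng = random.Random(seed)
    # Each gene is regulated by k distinct genes chosen at random (possibly itself)
    inputs = [rng.sample(range(n_genes), k) for _ in range(n_genes)]
    # Each gene gets a random truth table with 2**k rows
    tables = [[rng.randint(0, 1) for _ in range(2 ** k)] for _ in range(n_genes)]
    return inputs, tables

def update(state, inputs, tables):
    """Synchronously update every gene from the current values of its regulators."""
    new_state = []
    for regulators, table in zip(inputs, tables):
        row = 0
        for r in regulators:
            row = (row << 1) | state[r]  # regulator values form a binary row index
        new_state.append(table[row])
    return tuple(new_state)

def find_attractor(state, inputs, tables):
    """Iterate until some state repeats, then return the length of the cycle."""
    first_seen = {}
    t = 0
    while state not in first_seen:
        first_seen[state] = t
        state = update(state, inputs, tables)
        t += 1
    return t - first_seen[state]

inputs, tables = make_network()
print(find_attractor((1, 0, 1, 1, 0, 0, 1, 0), inputs, tables))
```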

S: That’s what you are criticizing? Models that can be represented with a number of variables and differential equations?

H: Yes. You can always formulate our biological models as sets of differential equations with a finite number of variables. In a way, biology has been ignorant of computer science by sticking to a framework of finite dimensional dynamical systems.

S: Hold on a minute. Any physical model can be written as a set of differential equations with a finite number of variables. How is that being ignorant of computer science? Physics, at the fundamental level, is all differential equations.

H: That is precisely what everyone gets wrong about this. Some physically realistic systems cannot be understood as finite dimensional dynamical systems.

S: Like what?

H: Like Turing machines.

S: Isn’t that because Turing machines are mathematical abstractions that require infinite memory? In biology we’re concerned with things that can be physically instantiated, not mathematical abstractions.

H: Turing machines do not need infinite memory. In his original 1936 paper, Alan Turing did not endow his machine with an infinitely long memory tape. In fact he was explicitly concerned with things that are – in his own words – “calculable by finite means”. It is just that the description of a Turing machine does not include the length of the memory tape. In other words, you can describe how a Turing machine operates without specifying how long the memory tape is or specifying whether it can grow if it runs out of space.

S: I am lost. Every physical device contains a limited number of particles. And the laws of physics are differential equations. So does it not follow that every physical device is ultimately a finite dimensional dynamical system?

H: Excellent question. The answer is, surprisingly, “no”. Any closed device can be modeled as a finite dimensional dynamical system. But a device that can exchange particles with the environment might not be describable as a finite dimensional dynamical system. That doesn’t mean it’s an infinite dimensional system. (The nomenclature is confusing.) At any given moment the device’s state is determined by a finite number of variables. But that number can increase as a result of the device recruiting more memory space by adding new components to itself. Adding new components to the system is like adding new variables and new differential equations. Think of a dynamical system that has a rule for adding new equations/dimensions.

So a system that grows in its number of parts might not be describable as a finite dimensional dynamical system.
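
Here is a minimal sketch of such a rule, assuming discrete time steps and a made-up overflow rule chosen only for illustration: each variable can hold a bounded value, and when all of them are full the system recruits a new one. At any moment the state is finite, but no fixed number of variables describes the system for every input.

```python
def increment(state, capacity=10):
    """Add one unit to a state made of bounded components (each holds 0..capacity-1).

    Hypothetical growth rule: when every existing component is full, the
    system adds a new component, i.e. a new variable.
    """
    state = list(state)
    i = 0
    while i < len(state):
        if state[i] < capacity - 1:
            state[i] += 1
            return state
        state[i] = 0  # this component overflows; carry into the next one
        i += 1
    state.append(1)  # no room left: grow by one dimension
    return state

state = [0]
for _ in range(1234):
    state = increment(state)
print(state)  # [4, 3, 2, 1]: four variables were needed for this input
```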

S: Are you saying that we fail to consider that systems can grow in biology? What about cell division or neurogenesis? What about protein synthesis? People model growth all the time.

H: No, that’s not what I said. What I am saying is that the models we use to study computation in biology always fall within the framework of finite dimensional dynamical systems. This is true even when the subject is cell division or dendritic spine growth. People capture growth with single variables, e.g. x = total population of cells or y = number of spines. You can always describe our biological models with a predetermined number of variables that have predefined rules for influencing one another. The variables that are needed to simulate the model are known ahead of time.

That is a weak framework for studying computation. Not all physical systems are finite dimensional dynamical systems. Closed systems are. But systems that can grow are not.

S: But what is the alternative? Computers? If anything, it seems like computers are extremely closed systems. They are finite collections of wires, transistors, and circuit elements that obey the laws of physics. They don’t grow. So they can be described as dynamical systems. Right?

H: You might be able to describe a specific computer, like the one on my desk, as a physical object governed by a set of differential equations. But that misses the point. Computer science is mostly the study of computer programs, not of the physical devices which execute them.

Computers don’t grow, but computer programs do. A program can recruit more memory and vary in the amount of space it occupies as it progresses in time. When I say “this code runs with polynomial memory space”, I am being agnostic about how much hard disk is available on the computer that it is intended to run on. So it’s really a statement about the computer program, not the computing device.

S: That’s an interesting way of looking at it. So what you’re saying is that computer programs can’t be modeled as dynamical systems because they might grow in the space they occupy in the computer’s memory, which means the number of variables needed to describe their state may increase through time.

H: Yes. And you may not know how many variables are needed to run a specific program ahead of time, because it can depend on the input to the program.
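
One way to see this, using a classic example chosen for illustration: the program below records every value the Collatz map visits until it reaches 1. The list is the program’s working memory, and its final length is not written anywhere in the code. It depends on the input, no formula for it is known, and whether the loop even finishes for every input is the open Collatz conjecture.

```python
def collatz_trajectory(n):
    """Record every value visited by the Collatz map, starting from a positive n, until 1."""
    trajectory = [n]  # memory use grows with each step
    while n != 1:
        n = n // 2 if n % 2 == 0 else 3 * n + 1
        trajectory.append(n)
    return trajectory

print(len(collatz_trajectory(6)))  # 9 values: 6, 3, 10, 5, 16, 8, 4, 2, 1
print(len(collatz_trajectory(7)))  # 17 values
```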

S: Okay, I see. Dynamical systems might not be able to describe computer programs. But are you suggesting they are also the wrong framework for describing biological systems?

H: Yes, perhaps.

S: Why? Because biological systems grow?

H: Oh, no. You’re making it sound like the issue is growth. The only reason I brought up growth is to argue that dynamical systems are not general enough to describe all kinds of physical systems. I am afraid this is distracting us from the main point.

The main reason dynamical systems are so unsatisfactory is that they are computationally weak systems, realistically speaking, that is. It’s about computational power, not growth.

S: In what sense are dynamical systems weak?

H: They are weak in terms of the scope of problems they can solve. There are some problems that cannot be solved using dynamical systems.

S: What is an example of a problem they cannot solve?

H: Here’s one easy example. No realistic finite-dimensional dynamical system can be used to simulate Turing machines.

S: Why should biologists care about simulating Turing machines?

H: Okay, forget Turing machines for now. I’ll give you another example. Consider this problem: given a piece of code in your favorite programming language (e.g. Python or C++), determine the output of the program. Realistically, dynamical systems cannot solve this problem.

S: You’re saying that dynamical systems are weak because they cannot simulate computer programs. But computer programs are digital and deterministic. Biology is analog and probabilistic. Why even compare the two?

H: To answer this I have to teach you something which I consider to be one of the most profound and mysterious discoveries of the 20th century.

Part II: The Insight

H: In the late 1920s and early 1930s, mathematicians and logicians were getting deeply philosophical. They were asking questions like “Can we come up with an algorithm to decide if a given statement can be proved true?” Or “Are there some numbers that can be defined but not calculated?” Or “Can we come up with a system for constructing functions that is general enough to include all calculable functions?” There was a concerted attempt to formalize the notion of “computation”.

Three approaches, developed independently of one another, came out of that era: 1) combinatory logic and lambda calculus, 2) general recursive functions, and 3) Turing machines and Post’s model. In the end, these three competing attempts at formalizing the notion of computation turned out to be equivalent. It wasn’t obvious at first; in fact, it took years for people to realize this. All three systems are equally powerful. Each one can be used to simulate any of the others.

This led people to the idea that they had found the limit of computability. It is common to state this idea in terms of Turing machines: “Turing machines are capable of computing any computable function”, which is essentially what the Church-Turing thesis says. But you can replace “Turing machine” with any of the other systems. For instance, lambda calculus is capable of computing any computable function.
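
For a taste of how different these systems look, here is lambda-calculus arithmetic (Church numerals) written with Python lambdas as a stand-in for lambda terms. A number n is encoded as “apply a function n times”, and addition and multiplication are built from function application alone. This only illustrates the encoding; it is not a demonstration of equivalence, and `to_int` is a helper that uses ordinary Python integers purely to decode results.

```python
# Church numerals: the numeral n takes f and x and applies f to x n times.
zero = lambda f: lambda x: x
succ = lambda n: lambda f: lambda x: f(n(f)(x))
add = lambda m: lambda n: lambda f: lambda x: m(f)(n(f)(x))  # f^m(f^n(x))
mul = lambda m: lambda n: lambda f: m(n(f))                  # (f^n)^m

def to_int(n):
    """Decode a Church numeral by counting how many times it applies f."""
    return n(lambda k: k + 1)(0)

two = succ(succ(zero))
three = succ(two)
print(to_int(add(two)(three)))  # 5
print(to_int(mul(two)(three)))  # 6
```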

S: That’s why you keep bringing up Turing machines.

H: Yes! And you can see now that Turing machines aren’t really that special. They’re just one of many universal computation systems. When I bring it up you can replace it with any other equivalent system, even your favorite programming language. Because anything that can simulate a Turing machine is also capable of computing any computable function.

S: So if I understand correctly, there’s something called the Church-Turing thesis that says Turing machines can compute anything that can possibly be computed. And I can replace “Turing machine” with “Python” or “JavaScript” and it still holds true.

H: Yes!

S: Is this a proven fact?

H: Well this is where it gets a bit mysterious. The problem with proving the Church-Turing thesis is that it’s not entirely well-defined. You have to prove that Turing machines can compute anything that is computable. But what does it mean for something to be “computable”? The whole point of coming up with Turing machines was to formalize what it means to compute.

S: So it’s a definition! The Church-Turing thesis simply defines what it means to be computable. Right?

H: Some people seem to think it’s just a definition. I don’t. I think it’s claiming something substantive. A definition isn’t something that is true or false. But this is a statement that can in principle be refuted.

S: I still don’t get it. So is the Church-Turing thesis an empirical statement? Or is it a mathematical statement?

H: It’s unclear. From the very beginning there have been different interpretations. Gödel suggested that it can be proved from a set of axioms that define “effectively calculable”. In fact, Nachum Dershowitz and Yuri Gurevich proved it in 2008.

S: So there is a proof for the Church-Turing thesis?

H: Yes, but you can always disagree with the axioms that they chose for that proof. Someone can say that their definition of computation was not general enough to encompass all kinds of computation. For example, their axioms allowed analog computations and real numbers but everything still has to happen in discrete steps. Computation has to be algorithmic according to their axioms.

S: That brings us to something I wanted to say, actually. In the classical view, computation is something that is algorithmic – happening in discrete time steps. And there may be other assumptions about what it means to compute that don’t necessarily apply to the natural world.

H: Then let’s only consider the empirical interpretation of the Church-Turing thesis. If you show me a biological or physical system that can compute things that Turing machines cannot, then we shall say we have disproved the Church-Turing thesis. Non-algorithmic, non-discrete computations are permitted. So far, no one has done this.

S: I guess I’ll have to take your word for it. But I’m still bothered by this whole approach. You are imposing a framework onto an area where it might not be applicable. Nature does things that cannot be well defined. It’s not mathematics or computer science where you have a clear separation of inputs and outputs. I mean, what are the inputs and outputs of my cat? What exactly is it computing? Does a redwood tree compute? What about a rock that absorbs sunlight and emits rays and changes shape with years of weathering?

H: Excellent questions. This is what I am claiming: You get to pick the system and you get to pick the inputs and outputs. As long as your system is realistic, anything that your system can be used to compute, Turing machines can also compute. I don’t care if your system is analog, probabilistic, or continuous in time. You cannot transcend the power of Turing machines unless the Church-Turing thesis is wrong (at least the physical/empirical interpretation of the thesis).

S: But my point is that sometimes we might not be able to define the inputs and outputs and specify how they’re related. For example, integer factorization is a well-defined computation problem. The input is a single number and the output is a list of numbers (its prime factors), uniquely determined by the input. But if we’re talking about biology, well… what is the input to output mapping that a cat solves?

Perhaps you can say the inputs come from its sensory organs and its outputs are motor commands. But what time interval are we talking about? Are we talking about mapping instantaneous sensory stimuli to immediate motor commands? Surely, cats cannot be modeled that way; a cat can do different things in the same exact sensory situation. So the instantaneous input cannot be mapped to a unique output, because a cat can use memory of past experiences to influence decision making. On the other extreme, you might say that the cat maps a lifetime of input stimuli to a lifetime of motor commands to maximize survival or reproduction. But this is not the predefined input to output framework that is typical in computation theory. Most of a cat’s sensory inputs are the result of its previous decisions. A cat’s actions shape its sensory inputs, and the sensory inputs, along with a memory of past experiences, are used to guide its next actions. There’s a constant feedback loop between inputs and outputs. In integer factorization, by contrast, it’s just a straightforward predefined mapping.
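
For contrast, S’s example of a cleanly defined problem fits in a few lines. This sketch uses trial division, the simplest method, chosen for brevity rather than speed: one number goes in, and a uniquely determined list of prime factors comes out.

```python
def factorize(n):
    """Prime factorization by trial division, in non-decreasing order."""
    if n < 2:
        raise ValueError("n must be an integer >= 2")
    factors = []
    d = 2
    while d * d <= n:
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:
        factors.append(n)  # whatever remains has no smaller divisor, so it is prime
    return factors

print(factorize(360))  # [2, 2, 2, 3, 3, 5]
```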

H: You raise very good points. Here is how I see it. It is not that the cat as a whole organism is solving a specific input/output mapping. Rather, it is that the cat contains one or more computational systems that can be used to solve various problems when they come up.

An analogy that you may dislike, but that I think will be helpful anyway, is a smartphone. What input/output relationship does your phone solve? The same issues you raised about past experiences and input/output feedback loops also apply to computing devices like smartphones. It is really difficult to describe smartphones as solving a unique input to output mapping. But your smartphone contains many applications, each containing many modules and functions. One module is responsible for drawing images on the screen; another may be responsible for converting information into packets to send over the network. At that level, the input to output relationships can be well defined.

Similarly, a cat’s behavior can be decomposed into simple tasks and computation problems. People characterize and study these smaller components in the field of animal behavior. My current favorite example is path integration: knowing where you are in space by integrating small movements. It is a well-defined computation problem, almost as well-defined as integer factorization! The inputs to the computation (orientation and speed) have to come from other computation modules and the output (position in space) can be used for all sorts of different things.
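
Path integration is easy to state as code. In this minimal sketch, the input format (samples of heading in radians, speed, and time step) is an assumption standing in for whatever upstream modules supply orientation and speed; the output is position relative to the start. Nothing in the code limits how many steps it can integrate.

```python
import math

def path_integrate(steps, start=(0.0, 0.0)):
    """Dead reckoning: sum small displacements to track position.

    `steps` is a sequence of (heading_radians, speed, dt) samples.
    """
    x, y = start
    for heading, speed, dt in steps:
        x += speed * dt * math.cos(heading)
        y += speed * dt * math.sin(heading)
    return x, y

# Walk 3 units east, then 4 units north: the home vector has length 5.
outbound = [(0.0, 1.0, 1.0)] * 3 + [(math.pi / 2, 1.0, 1.0)] * 4
x, y = path_integrate(outbound)
print(round(math.hypot(x, y), 6))  # 5.0
```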

S: Okay, but even if we succeed in decomposing animal behavior into smaller components and modules, the functions are not necessarily as cleanly defined as it is for, say, path integration. In path integration there is a unique correct answer. But when an animal is searching for a mate or running away from a predator, there isn’t a unique correct answer. There can be many correct answers and the animal can just make a decision that is good enough. The classical conception of computation, with fixed input to output mappings, is not suited for these types of problems.

H: I disagree. In practice, many algorithms are heuristics or approximations that find an answer that is good enough. And very often computer programs use random generators to produce outputs that are variable and not always unique.

I think you can always formulate computation problems as classical input to output mappings. A problem that has more than one correct output can be reformulated as a decision problem that checks whether a candidate output is correct or “good enough”.
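
Here is a minimal sketch of that reformulation, using a made-up grid-navigation task as a stand-in for something like escaping a predator. Many different routes are acceptable, so there is no single correct output; but the question “is this candidate route valid and no longer than the budget?” has exactly one yes/no answer.

```python
def is_good_enough(grid, path, start, goal, budget):
    """Decision version of a route-finding problem with many correct answers.

    `grid` is a list of strings where "#" marks a wall. A route is good enough
    if it runs from start to goal in single steps over open cells and uses at
    most `budget` moves.
    """
    if not path or path[0] != start or path[-1] != goal:
        return False
    if len(path) - 1 > budget:
        return False
    for (r1, c1), (r2, c2) in zip(path, path[1:]):
        if abs(r1 - r2) + abs(c1 - c2) != 1:
            return False  # not a single step
    for r, c in path:
        if not (0 <= r < len(grid) and 0 <= c < len(grid[0])) or grid[r][c] == "#":
            return False  # off the map or into a wall
    return True

grid = ["..#",
        "...",
        "#.."]
route_a = [(0, 0), (1, 0), (1, 1), (1, 2), (2, 2)]
route_b = [(0, 0), (0, 1), (1, 1), (2, 1), (2, 2)]
print(is_good_enough(grid, route_a, (0, 0), (2, 2), budget=4))  # True
print(is_good_enough(grid, route_b, (0, 0), (2, 2), budget=4))  # True
```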

S: Your claim is that Turing machines are the ultimate, extremely powerful computing system that can solve anything that can be solved, including all problems that come up in biology. Where are you going with this?

H: Good. Being able to solve all computable problems is called “universal computation”. But let me reemphasize that it’s not just Turing machines that are universal. There are numerous systems that are also universal. Very little is required to achieve universal computation. Almost all programming languages achieve it, even ones with ridiculously minimal components. Some systems have stumbled upon it accidentally. For instance, Wolfram’s Rule 110 and Conway’s Game of Life were not intended to be powerful computation systems. But with very simple rules, they are capable of computing any computable function (i.e. they are Turing-equivalent). So it’s not that Turing machines are extraordinarily powerful computers. It’s that dynamical systems and neural networks are pathetically weak.
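
To show how little machinery this takes, here is a sketch of the entire update rule of Rule 110, one of Wolfram’s elementary cellular automata. Each cell’s next value depends only on itself and its two neighbors; those three bits form an index into the binary digits of the number 110. The fixed-width row with zeros beyond the edges is a simplification for display. Showing that this rule is universal (a result due to Matthew Cook) requires an elaborate construction that this sketch does not attempt.

```python
RULE = 110

def eca_step(cells, rule=RULE):
    """One synchronous update of an elementary cellular automaton.

    Each cell's (left, self, right) values form a 3-bit index 0..7, and the
    cell's next value is that bit of `rule` (Wolfram's numbering scheme).
    Cells beyond either edge are treated as permanently 0.
    """
    padded = [0] + cells + [0]
    return [
        (rule >> (padded[i - 1] << 2 | padded[i] << 1 | padded[i + 1])) & 1
        for i in range(1, len(padded) - 1)
    ]

row = [0] * 15 + [1]
for _ in range(8):
    print("".join("#" if c else "." for c in row))
    row = eca_step(row)
```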

S: Pathetically weak? That sounds unfair. What about all the recent success we’ve had with deep neural networks? Neural nets can beat humans in games like Go and StarCraft!

H: And what do these deep networks run on? Are they not computer programs running on digital computers?

S: Yes, but they can be implemented with networks of neurons too.

H: Maybe they can. But we are currently using digital computers and programming languages to train and run them, not analog circuits or networks of neurons. I think that attests to the generality of Turing-equivalent systems. Notice that Go and StarCraft are both examples where there is no uniquely correct move. You just criticized the classical framework of computation theory for not being able to deal with these kinds of problems. Remember? Well, here we have programs that are playing games! Isn’t it fascinating that we are still using the same CPU operations and architecture for this? Turing-equivalence is all you need. Dynamical systems, on the other hand, can’t even do what the simplest programming languages can do.

S: You need to be a little more concrete about the weakness of dynamical systems. I asked you for an example of something they can’t compute, and you said they can’t simulate Turing machines or run computer programs. But that’s not a very convincing example.

H: Well, to be honest it’s hard for me to give you more examples that I’m 100% sure about. But I can give you examples of things I suspect dynamical systems are realistically incapable of. Here’s one: given a string of open and close parentheses, e.g. “(()(()))()”, return its maximum depth.

Or here’s an even simpler one! Given a string of Xs and Os, determine if the number of Xs equals the number of Os.
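
Both problems have short programs. In this sketch the counters are ordinary Python integers, which have no fixed upper bound, so the same code works for any input length; the only limit is the memory of the machine running it.

```python
def max_depth(parens):
    """Maximum nesting depth of a parenthesis string."""
    depth = best = 0
    for ch in parens:
        if ch == "(":
            depth += 1
            best = max(best, depth)
        elif ch == ")":
            depth -= 1
    return best

def same_number_of_x_and_o(s):
    """True if the string contains as many Xs as Os."""
    balance = 0  # an unbounded integer counter
    for ch in s:
        if ch == "X":
            balance += 1
        elif ch == "O":
            balance -= 1
    return balance == 0

print(max_depth("(()(()))()"))                    # 3
print(same_number_of_x_and_o("XOXXOO"))           # True
print(same_number_of_x_and_o("X" * 10**6 + "O"))  # False
```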

S: Really? I have a hard time believing dynamical systems are incapable of solving this problem. It’s basically a counting problem.

H: Yes. I suspect that dynamical systems cannot be made to count up to arbitrarily large numbers. You can certainly make a dynamical system that will work for input sizes up to, say, 50 or 100 characters of Xs and Os. But there will always be a limit to how high your dynamical system can count.

S: Any physical system will have that limitation. Your computer has limited memory so it also has a limit to how large of a number can be stored on it. It can’t count up to infinity!

H: You’re confused again about the distinction between computing devices and computer programs. It’s the computer program (and also the computer architecture) that is Turing-equivalent. I can write a program that counts Xs and Os up to arbitrarily large numbers. If you run it on some huge input and it runs out of memory or batteries, that’s a limitation that came from your computing device – not from my program. You can then take the same program and run it on another computer with large enough memory and it will work. The crucial point is that it will work with more resources without changing the code.

On the other hand, there is no description of a dynamical system that can count arbitrarily high. You can describe a dynamical system that counts up to a very large number. But if I give an input that exceeds that limit, you will have to redesign your dynamical system. The description of your dynamical system will have to change to deal with larger inputs.
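
The contrast can be made concrete with a hypothetical “design” whose counter has a fixed number of distinguishable states, here a balance clipped to the range from -capacity to capacity. It is not a differential equation, but it shares the property H is pointing at: its number of states is fixed by its description. It answers correctly as long as the running count never leaves that range, silently gives wrong answers beyond it, and the only fix is to change `capacity`, that is, to redesign it.

```python
def make_bounded_counter(capacity):
    """Build an X/O checker whose counter can only take 2 * capacity + 1 values.

    Assumes the input contains only the characters X and O.
    """
    def same_number(s):
        balance = 0
        for ch in s:
            balance += 1 if ch == "X" else -1
            # saturate: the design has no state for values outside its range
            balance = max(-capacity, min(capacity, balance))
        return balance == 0
    return same_number

check = make_bounded_counter(capacity=50)
print(check("X" * 10 + "O" * 10))  # True, and correct
print(check("X" * 60 + "O" * 50))  # True, but wrong: 60 Xs vs 50 Os
```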

S: Okay. But I can argue that biological systems don’t need to count up to arbitrarily large numbers.

H: Neither do computer programs for all of our practical purposes! But we still use universal computation systems in technology.

S: But what’s something that biology absolutely needs universal computation for? It sounds like universal computation is not necessary if the input size is restricted.

H: If the maximum input size of a problem is predetermined, you can solve that problem with even a look-up table – which is far weaker than a dynamical system. But there are plenty of computation problems in biology where the input size is not predetermined.
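
A look-up table for the X/O problem shows both sides of this. The sketch below precomputes every answer up to a fixed maximum length. It needs no computation at query time, but its size roughly doubles with every extra character allowed, and any longer input is simply missing.

```python
from itertools import product

def build_table(max_len):
    """Precompute the answer for every X/O string of length 0..max_len."""
    table = {}
    for n in range(max_len + 1):
        for chars in product("XO", repeat=n):
            s = "".join(chars)
            table[s] = s.count("X") == s.count("O")
    return table

table = build_table(10)
print(len(table))              # 2047 entries (2**11 - 1)
print(table["XOOX"])           # True
print(("XOOXX" * 3) in table)  # False: longer than the table was built for
```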

S: Like what?

H: Oh, I can think of so many examples, from human speech recognition to insect path integration.

S: But there is a limit to how long a sentence practically gets or how far an insect will ever walk.

H: Yes, I suppose you are right. But it’s much simpler to describe a solution to those problems that doesn’t take that limit into account.

It’s like saying that we don’t need an algorithm for division, because you can just memorize a very large division table. By your logic, there is a limit to the set of numbers humans will ever encounter, so we can replace all of math with look-up tables. The problem is that the descriptions become excessively long and complicated if you want to solve problems this way.

You have to stop thinking of universal computation as this sophisticated level of computation power that would not evolve unless there is a need for it. It is rather a very accessible level of computation that can be reached – often accidentally – by implementing unsophisticated rules.

Let me ask you a question. Do you think cars need a universal computer to function?

S: Probably not. Cars were invented way before computers.

H: Indeed. In principle, cars don’t require a universal computation system to work. But then why do modern cars have dozens of microprocessors in them? (Microprocessors are typically based on the von Neumann architecture and are Turing-equivalent.) I’m talking about things like controlling the engine and brakes. That’s all done with microprocessors these days. In fact, we have microprocessors in almost every modern device, including coffee machines, thermostats, toys, and washing machines. Microprocessors are replacing the older analog circuit solutions, not because these devices strictly need universal computers to work, but because universal computers are sufficient. In most situations it’s the simpler and more efficient way to compute.

S: I see your point. Although I am wary about generalizing what works for human technology to what works for biology. My intuition is that dynamical systems are a messier but more natural computation system. As long as they do the job, nature would not evolve a universal computer.

H: You are not alone. I believe this is how most biologists think. My intuition, however, is that it is extremely unlikely for nature to not have stumbled upon a universal computation system. And once such a system is found, it will spread and be used for almost everything that involves computation.

Part III: The Decoy

S: I am sorry to say, I have bad news for you. The entire premise of your argument is that dynamical systems and network models are computationally weak and that – unlike Turing machines and other universal computers – they cannot be used to compute any computable function.

Well, I did a bit of research and it turns out you are wrong! In 1990 Cristopher Moore showed that you can simulate Turing machines using finite-dimensional dynamical systems. In the following years, several other authors independently proved that dynamical systems can be Turing-equivalent. There’s even a neural network version! In 1991, Hava Siegelmann and Eduardo Sontag showed that you can simulate Turing machines using a finite sized neural network.

According to what you said, anything that can simulate Turing machines can also be used to compute any computable function. So dynamical systems and neural networks are universal.

H: You are good at this. I am glad you brought up these studies. But they do not contradict my position. I have tried to phrase my arguments carefully. What I said is that realistic dynamical systems cannot be used to simulate Turing machines.

S: Oh boy. What do you mean by realistic? This better be convincing.

H: It will be. I will convince you on two fronts. First, a practical consideration regarding the resolution of physical quantities. Second, a more serious consideration regarding something called structural stability.

Let’s take a closer look at how these dynamical systems are able to simulate Turing machines. Turing machines are composed of a “memory tape” and a “machine head” that can manipulate the symbols on the tape. The machine head only needs to be as intelligent as a finite state automaton, so implementing the head using a dynamical system is easy. The tough part is implementing the memory tape, because with finite-dimensional dynamical systems you only have a predefined set of variables at your disposal, but there is no limit to the number of symbols that can be on a Turing machine’s memory tape.
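
Here is a minimal Turing machine simulator in Python to make the two parts concrete. The rule table (the “head”) is finite and fixed; the tape is a dictionary that creates blank cells only when the head first visits them, so the machine’s description never mentions a tape length. The example rule table, which increments a binary number, is an illustrative choice; on the input 111 the head walks off the left end of the input and the tape grows to hold the carry.

```python
from collections import defaultdict

def run_turing_machine(rules, tape_input, start="q0", halt="halt", blank="_", max_steps=10_000):
    """Simulate a one-tape Turing machine.

    `rules` maps (state, symbol) -> (symbol_to_write, move, next_state), move in {-1, +1}.
    Cells are created on demand, so the tape has no predefined length.
    `max_steps` is only a safety cap for this sketch.
    """
    tape = defaultdict(lambda: blank, enumerate(tape_input))
    head, state = 0, start
    for _ in range(max_steps):
        if state == halt:
            break
        symbol, move, state = rules[(state, tape[head])]
        tape[head] = symbol
        head += move
    used = sorted(i for i, s in tape.items() if s != blank)
    return "".join(tape[i] for i in range(used[0], used[-1] + 1)) if used else ""

# Binary increment: walk right to the end of the number, then add 1 with carry.
INCREMENT = {
    ("q0", "0"): ("0", +1, "q0"),
    ("q0", "1"): ("1", +1, "q0"),
    ("q0", "_"): ("_", -1, "carry"),
    ("carry", "1"): ("0", -1, "carry"),
    ("carry", "0"): ("1", -1, "halt"),
    ("carry", "_"): ("1", -1, "halt"),
}

print(run_turing_machine(INCREMENT, "1011"))  # 1100
print(run_turing_machine(INCREMENT, "111"))   # 1000 (the tape grew to the left)
```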

Do you know how these dynamical systems implement the memory tape? They do it by using numerical digits after the decimal point as a string of symbols! So the number 0.2381 is interpreted as a memory tape consisting of the symbols 2, 3, 8, and 1. Now if you want to add the symbol 6, all you have to do is add 6 and divide by 10 to obtain 0.62381. If you then want to retrieve a symbol, you multiply by 10 and round down: 6.2381 rounds down to 6, and subtracting that 6 leaves 0.2381, the rest of the tape. That’s how you can store unlimited information in a single number.

Although, to be precise, a single number is more like a stack than a bidirectional tape. You need two numbers (two stacks) to implement a bidirectional tape. And the example I used was in base 10. But you can represent your numbers in any base. Binary representations will do. You can even use three unary stacks to simulate Turing machines. But these details are not important. My point is that all of these dynamical systems use numerical digits as strings of symbols.
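
The digit trick is easy to check with exact arithmetic. In this sketch, Python’s `fractions.Fraction` stands in for the unlimited precision these constructions assume, and `push`/`pop` are names chosen here for illustration; base 10 matches the example above, though any base works.

```python
import math
from fractions import Fraction

def push(stack, symbol, base=10):
    """Put a new symbol in front: (0.2381 + 6) / 10 = 0.62381."""
    return (stack + symbol) / base

def pop(stack, base=10):
    """Read the front symbol (multiply by base, round down) and remove it."""
    shifted = stack * base
    symbol = math.floor(shifted)
    return symbol, shifted - symbol

tape = Fraction(2381, 10_000)            # the symbols 2, 3, 8, 1
tape = push(tape, 6)
print(tape == Fraction(62381, 100_000))  # True
symbol, tape = pop(tape)
print(symbol, tape)                      # 6 2381/10000
```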

S: I agree that this is a strange model. I don’t think biological systems use numerical digits as strings of symbols. But these specific models are unrealistic because they are accomplishing the unrealistic goal of simulating Turing machines. Biological systems don’t need to simulate Turing machines.

My understanding is that simulating Turing machines is like a benchmark. If a system can be shown to do that, we know it can compute anything.

H: Your understanding is correct. But using numerical digits as symbols is critical for these systems. A necessary condition for universal computation is that the number of possible states not be restricted by the descriptor. The number of states for a computer program cannot always be determined from the code. It may depend on the input.

The number of variables in a finite-dimensional dynamical system is predetermined. If the amount of information that can be stored in each variable is bounded, then the number of states of that dynamical system is bounded by its descriptor. So it cannot be universal.
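The counting behind this step can be written out. As an idealization (not a measured value), assume the descriptor fixes $n$ variables and that each can be resolved into at most $K$ distinguishable values. Write $S$ for the resulting set of distinguishable states. A standard pigeonhole argument then also bounds how long a deterministic update $F$ can run before it repeats itself:

$$
|S| \le K^{n} = 2^{\,n \log_2 K},
\qquad
x_{t+1} = F(x_t) \;\Rightarrow\; \exists\, 0 \le i < j \le |S| :\; x_i = x_j .
$$

With $K = 2^{b}$ (that is, $b$ bits per variable), the state holds at most $nb$ bits, whatever the input. Once a state repeats, the trajectory is periodic, so running the system longer adds no new memory.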

The only way around this is to come up with a way to store unlimited information in at least one of those variables. That is why these systems have to assume unlimited precision.

S: I am not convinced. Unlimited precision is unrealistic, yes. But so is unlimited memory. Don’t Turing machines also require unlimited memory?

H: Right. We assume no limits for memory or energy when describing Turing machines. And we’re fine with that. The problem is not that these systems assume unlimited precision. The problem is that physical quantities are, in practice, extremely limited in the amount of information they can store. What is the practical resolution of a neuron’s membrane potential, or the concentration of a specific chemical? Let’s be generous and say 10 orders of magnitude. That’s about 33 bits of information. This places severe practical limitations on how much memory can expand in a dynamical system. Compare that with computer programs, which can practically recruit trillions of bits of information.
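The arithmetic, and what it means for the decimal-tape trick, can be checked quickly. In the sketch below, Python's `decimal` module with a precision of 10 plays the role of a physical quantity that can be resolved to 10 significant digits. This is a crude stand-in for "10 orders of magnitude" and an assumption, not a model of any real neuron or chemical. The helper `roundtrip` is hypothetical. It pushes digits onto one such number and then pops them back off.

```python
import math
from decimal import Decimal, localcontext

# Ten orders of magnitude of resolution, expressed in bits.
BITS = math.log2(10**10)
print(f"{BITS:.1f} bits")  # 33.2 bits


def roundtrip(digits: list[int], precision: int) -> list[int]:
    """Push digits onto one number, then pop them back off.

    Assumption: the "physical quantity" is modeled as a decimal number that
    keeps only `precision` significant digits after every operation.
    """
    with localcontext() as ctx:
        ctx.prec = precision
        x = Decimal(0)
        for d in reversed(digits):   # push: add the symbol, divide by 10
            x = (x + d) / 10
        out = []
        for _ in digits:             # pop: multiply by 10, round down, subtract
            x = x * 10
            d = int(x)               # truncation equals floor because x >= 0
            out.append(d)
            x = x - d
        return out


TAPE = [1, 2, 3, 4, 5, 6, 7, 8, 9, 1, 2, 3]   # 12 symbols
print(roundtrip(TAPE, precision=12))  # all 12 symbols come back
print(roundtrip(TAPE, precision=10))  # [1, 2, 3, 4, 5, 6, 7, 8, 9, 1, 0, 0]
```

Only the first 10 symbols survive at 10-digit resolution. Each additional symbol costs another factor of 10 in resolution, which is why this kind of memory cannot expand very far in practice.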

S: But the human brain contains billions of neurons and trillions of synapses. Even if each of these neurons/synapses contain a few bits of information, that seems plenty to me!

H: We are talking about how much memory can be added to a computation system, not how much information can be stored from the onset.

S: I don’t understand. How is a brain that contains billions of neurons and trillions of synapses different from a computer that contains billions or trillions of bits? Isn’t their memory capacity comparable?

H: The difference is that the amount of memory that is present in a computer is not part of a computer program’s descriptor. We spoke about this. You can give me code that solves a specific problem without knowing how much memory is available on the computer it will run on. Computers have a reservoir of general purpose memory that programs can recruit during computation. Any program, regardless of its purpose, can draw upon unused memory to complete a computation.
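A small Python sketch illustrates this point. It uses a textbook problem, checking that brackets are balanced, which no fixed finite-state device can solve for arbitrarily deep nesting. The function `check_brackets` is a hypothetical example. Its code is fixed, yet the list it uses as a stack can grow as far as the input demands, drawing on whatever memory the machine has free.

```python
def check_brackets(text: str) -> tuple[bool, int]:
    """Return (balanced?, peak stack size) for a string of brackets."""
    closing_to_opening = {")": "(", "]": "[", "}": "{"}
    stack: list[str] = []  # memory recruited on demand; no size is declared anywhere
    peak = 0
    for ch in text:
        if ch in "([{":
            stack.append(ch)
            peak = max(peak, len(stack))
        elif ch in closing_to_opening:
            if not stack or stack.pop() != closing_to_opening[ch]:
                return False, peak
    return not stack, peak


print(check_brackets("([]{})"))                        # (True, 2)
print(check_brackets("(" * 100_000 + ")" * 100_000))   # (True, 100000)
```

The same dozen lines handle both inputs. The second one simply recruits 50,000 times more memory than the first, and nothing in the "descriptor" had to change.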

On the other hand, finite-dimensional dynamical systems and neural networks are not designed to work that way. There aren’t network architectures or dormant dimensions/variables that can be used as general purpose memory units. In the papers you cited from the 90s, memory expansion is achieved by expanding the precision of one, two, or three special variables. It is as if the dynamical system is drawing from a reservoir of decimal places of a specific physical quantity, not from a reservoir of neurons or network motifs.

S: But if I come up with a model that does work that way, a model that uses general purpose memory units, that model will be universal?

H: Yes, potentially. As long as the number of memory units is not part of your network descriptor, your model might be universal. (Remember, the descriptor is what specifies the computation problem that the network solves.) I don’t know of any biologically plausible network models that work like this. The closest thing I’ve seen is a class of models called neural Turing machines.

S: Let’s take a step back. It seems that you agree that dynamical systems are capable of simulating Turing machines, and thus capable of computing any computable function. But your critique is that these systems are limited in how much their memory usage can increase once they are set into motion.

H: Yes. That is my first criticism. The limits on the precision or resolution of a physical quantity are much more severe than limits on how many components or particles can be added to a system.

The dynamical systems you cited, even if they could be implemented, can only increase their memory usage by something on the order of a hundred bits, while modern computer programs can increase memory usage by trillions or quadrillions of bits and not even be near any physical limitations.

But there is an even more serious problem with the dynamical systems you cited.

S: What?

H: All of the papers you’ve cited are dynamical systems that lack structural stability. Structural instability renders a system physically irrelevant. It is practically impossible to build a structurally unstable dynamical system or to find one to occur in nature.

In fact, there is a conjecture about this by Cristopher Moore, the very person you cited as first solving the Turing machine simulation problem. He conjectured in 1998 that “no finite-dimensional [dynamical] system capable of universal computation is stable with respect to perturbations, or generic according to any reasonable definition”.

This is why I say they are unrealistic.

S: And structural stability is widely accepted as a condition for being realistic?

H: Well, sort of. Moore argues that it is. A system that lacks structural stability needs to be implemented with infinite precision for it to resemble the intended dynamics. An error of one part per billion is enough to ruin it.
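Here is a toy illustration of what that sensitivity looks like for the decimal-tape trick. It is only a sketch and does not prove Moore's conjecture. Structural stability formally concerns perturbations of the system's equations, and the sketch mimics that in the simplest possible way. The read-out map multiplies by 10, and in the perturbed copy the multiplier is off by one part per billion. The helpers `encode` and `read_out` and the sample digits are illustrative.

```python
import math
from fractions import Fraction


def encode(digits: list[int]) -> Fraction:
    # Push the digits so that digits[0] ends up right after the decimal point.
    x = Fraction(0)
    for d in reversed(digits):
        x = (x + d) / 10
    return x


def read_out(x: Fraction, n: int, multiplier: Fraction = Fraction(10)) -> list[int]:
    # Pop n digits with the map x -> multiplier * x - floor(multiplier * x).
    out = []
    for _ in range(n):
        y = multiplier * x
        d = math.floor(y)
        out.append(d)
        x = y - d
    return out


DIGITS = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5, 8, 9, 7, 9, 3, 2, 3, 8, 4]
x0 = encode(DIGITS)

exact = read_out(x0, len(DIGITS))
# Same map, but the multiplier is off by one part per billion.
perturbed = read_out(x0, len(DIGITS), Fraction(10) * (1 + Fraction(1, 10**9)))

print(exact == DIGITS)  # True: the unperturbed map recovers every symbol
print(exact)
print(perturbed)        # agrees with exact for the first few symbols, then diverges
```

Each step multiplies the accumulated error by roughly 10. As a result, a relative error of $10^{-9}$ in the map corrupts the stored symbols within the first ten or so, and every additional symbol demands another factor of 10 in accuracy.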

S: But it’s a conjecture, not a proof.

H: Right. There may be some ingenious method for getting structurally stable dynamical systems to be capable of universal computation. And maybe nature uses that method. But as far as we know, dynamical systems are realistically incapable of universal computation.

S: And in that case, your argument falls apart.

H: Yes. If Moore’s conjecture is wrong then my argument falls apart. Otherwise dynamical systems are realistically weak computation systems.

Part IV: The Clues

S: There is much I have learned from our discussion. Let me try to summarize your arguments so far. You have convinced me that not all physical systems can be modeled as finite-dimensional dynamical systems. That is because some systems can grow, but dynamical systems don’t have a rule for adding more dimensions going forward in time.

H: Yes!

S: You also claim that there is this level of computation power that is easy to reach. And once you have a system that reaches that level, it can compute anything.

H: Well, not anything. A universal computer can compute anything that is computable. There are some things that are non-computable, by any machine. But that’s a discussion for another time.

You’re doing a great job summarizing my points. Please, continue.

S: Okay. You also believe that dynamical systems do not reach that level of computation power.

H: Yes. Although, to be precise, we’re talking about finite-dimensional dynamical systems that are physically/biologically relevant.

S: But at the same time you claim that it is really easy to reach universal computation. You keep saying that people sometimes accidentally stumble upon it.

H: Yes. Universal computation only requires a number of simple rules of operation. My intuition for why realistic dynamical systems fail to reach it is that they cannot grow in their dimensionality, which is something computer programs easily do.

S: So then your point is that evolution must have also stumbled upon universal computation?

H: It is hard to believe that evolution would not have stumbled upon universal computation. And once it finds a system that does compute like standard computers do, the selective advantages would be enormous.

S: And how exactly does biology implement universal computation?

H: I don’t know. We need to discover it.

S: I know. But I want to get a better sense of what it is that you think we should be looking for. A common theme that keeps coming up is growth or adding new variables. You say that a computer does this by providing a reservoir of memory space that programs can recruit when needed. A computer program can grow in the memory space provided in the computer’s hardware. So should we be looking for neural network architectures that implement general purpose memory units? Or some sort of dynamical system that has dormant dimensions that can be recruited according to specific rules?

H: That might actually work. Network motifs that serve as general purpose memory might be a solution to universal computation. But I think they’re unlikely to exist, especially given the fact that we haven’t found anything yet.

I think the most promising place to search for a universal computation system is at the molecular level. RNA and DNA molecules are bizarrely well-suited to be used in a computation system. They are extremely stable, can be modified with very little energy, and most importantly are structured as a string of symbols from a limited alphabet.
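To make "computing on a string of symbols from a limited alphabet" concrete, here is a classic abstract example, a 2-tag system. It has nothing to do with RNA chemistry, and it is not the model from the paper mentioned later in this post. Tag systems as a class are known to be capable of universal computation (Cocke and Minsky, 1964). The particular three-rule set below is only an illustration and is not claimed to be universal. The names `run_tag_system` and `COLLATZ_RULES` are hypothetical.

```python
def run_tag_system(word: str, rules: dict[str, str], deletion: int = 2,
                   max_steps: int = 10_000) -> list[str]:
    """Run a tag system and return every word it passes through.

    Each step reads the first symbol, deletes the first `deletion` symbols,
    and appends the rule's string for the symbol that was read. It halts
    once the word is shorter than `deletion`.
    """
    history = [word]
    for _ in range(max_steps):
        if len(word) < deletion:
            break
        word = word[deletion:] + rules[word[0]]
        history.append(word)
    return history


# A well-known three-symbol rule set: started on a word of n "a"s, the all-"a"
# words it reaches track the Collatz sequence of n (taking the shortcut
# n -> (3n + 1) / 2 for odd n).
COLLATZ_RULES = {"a": "bc", "b": "a", "c": "aaa"}

history = run_tag_system("aaa", COLLATZ_RULES)
all_a_lengths = [len(w) for w in history if w and set(w) == {"a"}]
print(all_a_lengths)  # [3, 5, 8, 4, 2, 1]
```

The only operations are reading the first symbol, deleting from the front, and appending to the back. That is about as simple as string manipulation gets.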

S: I’m getting the sense that you don’t know what you’re talking about. You think RNA or DNA are used for general purpose computation? Isn’t there a consensus in biology that DNA and RNA are for encoding and building proteins?

H: I am aware that this is a highly controversial claim. But I am not the first to suggest these ideas. In fact, some biologists are converging on RNA and DNA as substrates for memory and computation from two very different angles.

From the side of cognitive science, there is a [radical] idea that has been gaining momentum over the past decade: that memories are stored not in the synaptic weights between neurons, but rather in the structure of specific molecules inside neurons. The most well-known proponent of this idea is Randy Gallistel. I highly recommend you check out some of his writings. His 2008 book was a great source of inspiration for me, but you can also check out this paper summarizing the debate. These ideas can actually be traced back to the 1960s, but the more recent articulation is much stronger and more convincing.

S: Is there any experimental evidence for it though?

H: There’s some evidence, although not indisputable. There’s some evidence from studies of how neurons learn and remember time intervals, and some more evidence from studies of how sea slugs sensitize to shocks. I can point to a few others but the experimental evidence is scattered and controversial at this point.

But let me tell you about the other angle that is also converging upon RNA and DNA as having a computational role. You may know that less than 2% of our DNA actually codes for proteins. Every year, more functional roles are being discovered for the non-protein-coding portion of DNA and RNA. Some researchers believe that most of our genome might be functional. Resolving the extent of functionality in non-protein-coding RNA has been described as “the most important issue in genetics today”. You can check out this paper to see some of their arguments in the context of the ongoing debate in the literature.

S: These are indeed some very radical claims. I don’t see why we should take them seriously without strong empirical evidence.

H: Perhaps. But I believe the mainstream paradigms for cognition and development also need to be substantiated empirically. The failure of our models to clearly explain how genes turn into organisms, or how networks of neurons turn into behavior, is itself a sign that our models are lacking something.

S: It’s still not clear to me what a biological model for universal computation might look like. How exactly can RNA or DNA be used to compute things? The neural network model with general purpose memory units is a lot more tangible to me.

H: That is a question I have been thinking about for years. Can we come up with a biologically plausible system based on RNA or DNA, that is universal in its computation power? Is it in principle achievable at the molecular level? The answer, I argue, is yes. I wrote a paper on how a set of plausible RNA editing rules can make it possible to compute any computable function, or even simulate Turing machines at the molecular level. This is something that realistic dynamical systems are incapable of. I did not have to assume any extraordinarily complex molecular machinery in the model. It actually consists of very simple molecular processes, not very different from what we know to exist in cells.

S: So this is how you think biology computes things? This makes things a lot more concrete. You should have brought it up in the first place.

H: Well, to be honest, I still don’t know what I believe in. It’s very likely that nature’s universal computer will look different from what we can predict from pure theory.

The point of my model was to demonstrate that universal computation is in principle attainable at the molecular level. It is easily within the reach of evolution.

S: I see. And then you argue that it must have evolved.

H: Or that life began with one in the first place. The rules that make universal computation possible can be so simple that it is conceivable that it occurred naturally, by chance. RNA predated DNA and proteins. Maybe life began when matter suddenly stumbled upon universal computation by accident.

This may surprise you but my intuition is that nature needs Darwinian evolution to construct an eye, but it doesn’t need Darwinian evolution to construct a universal computer.

S: And you think this RNA-based computation system is being used in cognition, development, and cell regulation.

H: I would like to believe so. But, again, we don’t know yet. We have to discover life’s computer. It probably works in ways that are hard to predict with what we know now.

S: Good luck trying to discover it. These ideas are very interesting but I am afraid you may be placing too much faith in theory. At the end of the day, it is empirical evidence that will sway people.

H: And I am worried that you are placing too much faith in what we think we know about biology. Between that and computation theory, I choose to place my faith in computation theory. Either biology magically circumvents the insights we have gained from the theory of computation since the 1930s, or we just haven’t discovered how biology computes yet. I think the latter is more likely.

This isn’t something I can go off and discover by myself. It requires resources and dedicated researchers willing to work on it. That’s the reason I am trying to sway people. I have come to realize over the years that an important component of scientific progress is collectively deciding what problems are worthwhile. It would be quite sad if humans never discover how they think and what brings matter to life. As a species, we have limited resources and limited time on this planet. There is too much left to discover for me to be fine with people working in directions that I believe to be dead-ends.

S: I agree with your last point, although what you are proposing may be a dead-end too. Deciding what problems are worthwhile is a subjective matter. But if it makes you feel better, this discussion is something I will be thinking about for some time.

H: Great! That’s what I was hoping for.

Thanks for the thought-provoking post.

If a finite dynamical system is allowed to evolve for an arbitrarily long time between getting the input and producing the output (i.e. the current state of the system determines whether computation is complete or the system should keep evolving), does it still have this limitation? The system is finite but the unfolded computation graph between the input and the output grows indefinitely.

Thanks! Yes, it would still be limited in its computational scope. We typically don’t assume any bounds on time/energy/space when analyzing the computational scope of these systems, not for Turing machines, and not for structurally stable finite-dimensional dynamical systems.